REALISTIC MARINER MOVEMENT

ABOUT HALFWAY THROUGH THE SIX YEARS THIS PROJECT LASTED, WE UNDERTOOK A UNIQUE INVESTIGATION INTO HOW TO RECORD REALISTIC MOVEMENTS OF THE CREW OF A SAILING SHIP TO HELP SIMULATE THE ACTIVITIES OF THE VIRTUAL MAYFLOWER CREW.

One of the challenges faced by designers of VR recreations of historical scenes is how best to include believable representations of humans – avatars – whilst not compromising the computer’s run-time performance (which will happen if too many avatars of too high a level of visual realism, or fidelity, are attempted).

One technique for achieving this trade-off is borrowed from the producers of computer-generated imagery (CGI) for cinematic productions. Here, detailed 3D humans are used only for close-up scenes; more distant avatars are visually very simple, but the illusion of reality is maintained by giving them recognisable motion patterns.
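
The distance-based trade-off described above can be sketched as a simple level-of-detail selector. This is a minimal illustration only, not the project's code: the `Avatar` class, the `select_lod` function and the distance thresholds are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Avatar:
    name: str
    position: tuple  # (x, y, z) in metres; hypothetical scene units


# Hypothetical thresholds: (maximum distance in metres, detail level).
# Real values would be tuned against the target hardware's frame rate.
LOD_THRESHOLDS = [(5.0, "high"), (20.0, "medium")]


def select_lod(avatar, camera_position):
    """Return the detail level to draw for an avatar, based on camera distance."""
    distance = math.dist(avatar.position, camera_position)
    for max_distance, level in LOD_THRESHOLDS:
        if distance <= max_distance:
            return level
    # Beyond the last threshold, use the simplest mesh and rely on motion cues.
    return "low"
```

For example, an avatar 3 m from the camera would be drawn at the "high" level, while one 50 m away would fall back to "low".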

To generate believable movement sequences, developers use Motion Capture (MOCAP) techniques. For the early part of the Virtual Mayflower project (2017), we had access to a then-new piece of wearable MOCAP hardware called the Perception Neuron. The suit takes the form of a flexible ‘exoskeleton’ comprising small Inertial Measurement Units (IMUs), each containing a gyroscope, accelerometer and magnetometer. The suit can be worn over other clothing, and the movement data collected from each IMU are transferred via a mini-USB cable to a lightweight laptop carried in a low-profile backpack worn by the participant.

In June of 2017, we were given an opportunity to undertake a truly unique trial of this technology as its wearer braved the wind and rain to scale the heights of a tall ship moored at London’s Canary Wharf.

Owned by the Jubilee Sailing Trust, the Lord Nelson is one of only two tall ships in the world specially designed to be sailed by a crew with a wide range of physical abilities. The Trust is an international disability charity that promotes integration through the challenge and adventure of tall ship sailing.

Despite the appalling weather, Beth Goss, the ship’s Bosun’s Mate, managed to complete a 34 m climb of the vessel’s main mast. The Perception Neuron suit recorded her every movement, including a range of actions the crew of the Mayflower would have carried out, such as transiting the yard arm and unfurling the lower topsail. The activities chosen were based on observations made by Professor Robert Stone whilst onboard the Mayflower II in Plymouth, Massachusetts, USA.

Beth also wore two 360° panoramic cameras (Nikon KeyMission 360 and Ricoh Theta) to record her activities as she went aloft.

Unfortunately, in this case, the technology did not allow us to produce reliable and consistent motion capture files suitable for avatar movement programming. Although the Perception Neuron suit appeared to offer an ideal and relatively non-intrusive technique for recording human motion in unconventional settings (and the rigging of the Lord Nelson was certainly unconventional!), the way in which the device records motion was poorly suited to that setting. The large amounts of metal present onboard the Lord Nelson disturbed the suit’s magnetometers, causing calibration drift, noise and, thus, inconsistent and unreliable motion records (such as twisting virtual ‘bone’ elements).
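
One common way to spot this kind of interference is to compare the magnitude of each magnetometer reading with the expected local geomagnetic field strength (roughly 25 to 65 µT at the Earth's surface); nearby ferrous metal tends to push the measured magnitude well away from that value. The sketch below is a generic heuristic, not the Perception Neuron's own processing, and the function name, reference field and tolerance are assumptions chosen for illustration.

```python
import math


def magnetic_disturbance_flags(samples_ut, reference_ut, tolerance=0.15):
    """Flag magnetometer samples whose magnitude strays from the expected field.

    samples_ut   -- iterable of (x, y, z) readings in microtesla
    reference_ut -- expected local field magnitude in microtesla (assumed known)
    tolerance    -- allowed fractional deviation (hypothetical default of 15%)
    """
    flags = []
    for mx, my, mz in samples_ut:
        magnitude = math.sqrt(mx * mx + my * my + mz * mz)
        # A large deviation suggests metal or other magnetic sources nearby.
        flags.append(abs(magnitude - reference_ut) > tolerance * reference_ut)
    return flags
```

Frames flagged in this way could be excluded from heading correction or marked for manual clean-up, rather than being trusted as valid orientation data.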

We found similar noise and motion capture errors, requiring extensive remedial modelling of the end result, in another project, where we used an optical MOCAP system (a 12-camera OptiTrack Flex 13 set-up with Motive software) to track the movements of users wearing full-body suits fitted with small, high-contrast plastic spheres.

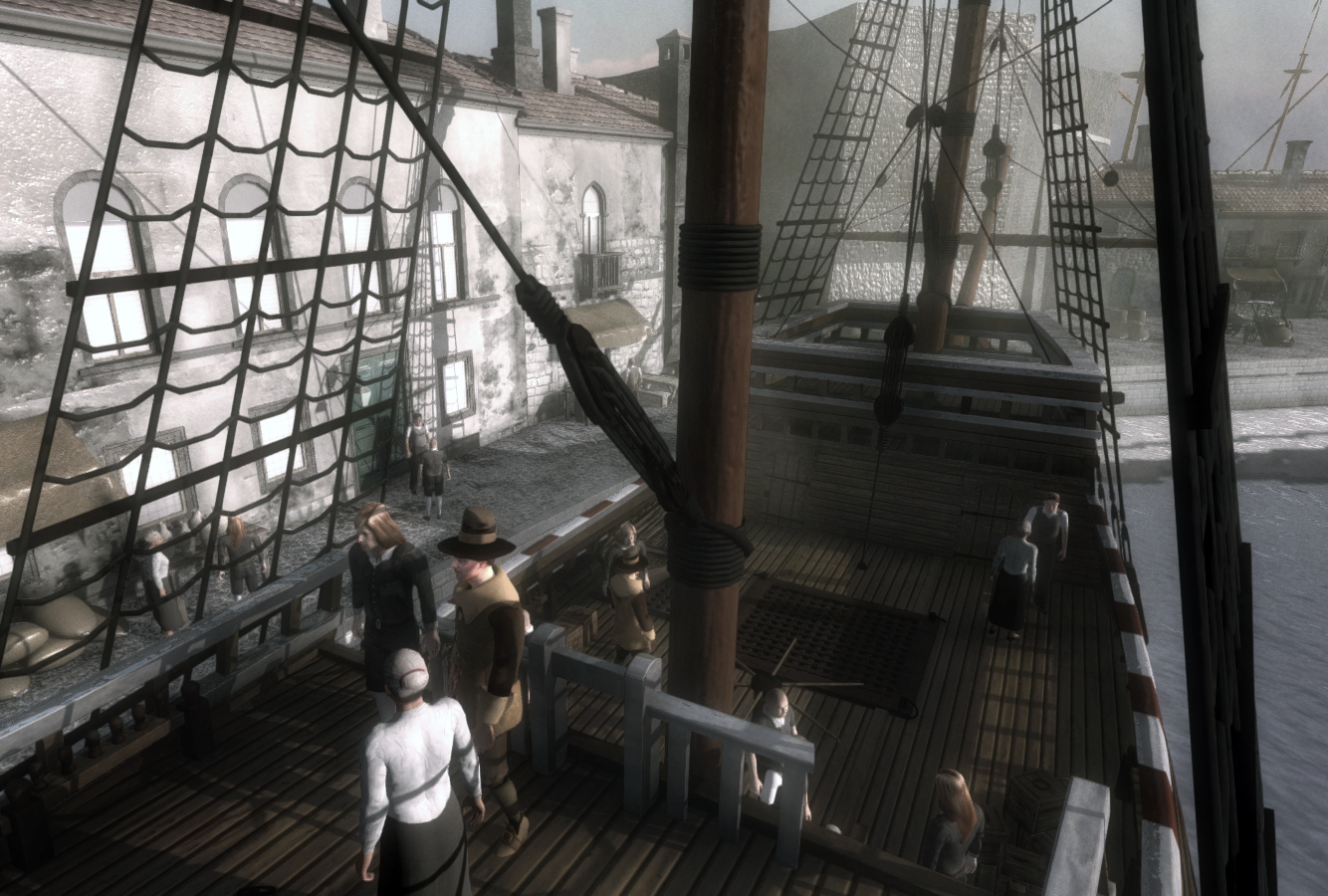

Consequently, we decided that the best approach to generating Pilgrim and Mayflower crew and passenger avatars, plus Barbican inhabitants in period costumes, would be to seek the help of an experienced game designer. Mike Acosta, Lecturer at Royal Leamington Spa College and subject specialist in the field of Games Art, stepped forward to perform that role. Even setting aside the research required to identify clothing styles and on-land and on-ship activities for the Pilgrims and Mayflower crew, the process of avatar design is a complex one, involving many steps.

Fortunately, the 2016 visit to Plymouth, Massachusetts, proved invaluable in this respect. In summary:

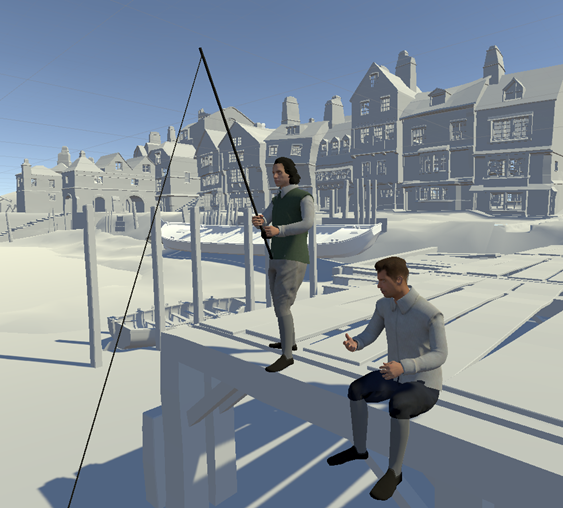

- “Meshes” representing each avatar were modelled using 3ds Max, ensuring that the mesh for each virtual human contained as few polygons as possible (“low poly”). This included the faces of the male and female characters.

- Subsequently, a high-poly mesh was sculpted using the Pixologic tool ZBrush (a digital sculpting tool with interactive styles similar to traditional sculpting, used in games design and CGI for movies).

- Clothing, based on styles identified during online research and from images taken during the visit to Plymouth and Plimoth Plantation in 2016, was digitally designed using Marvelous Designer (MD). MD is a popular 3D package used to create dynamic virtual clothing, again for animation in movies and games, and increasingly for VR experiences.

- Clothing was then retopologised in 3ds Max. When applied to virtual clothing, the result of retopologising is an effect where the garments appear to “cling” more realistically to the virtual human.

- Then, using Substance Painter, the high-poly character mesh was baked. Baking, in computer graphics terms, is a process where information from a high-poly mesh is transferred onto a second low-poly mesh and then saved as a texture. A texture digitally represents the surface of an object, including colour, lighting and other effects, such as transparency and reflectivity. Texture mapping is the process by which a texture is wrapped around a three-dimensional object. Textures representing the avatar faces were based on stock images, as, given limitations in resources, no facial animation was required for this project.

- Clothing items were also textured using Substance Painter.

- The final avatar representations were reassembled within 3ds Max.

- Finally, the avatars were animated, based on their intended function within the final ship or Barbican context. To do this, a toolkit known as Mixamo was used. Mixamo supports the rigging of computer-generated humans and animals (endowing the models with primitive virtual ‘skeletons’) and was further used to generate the final animation sequences for the Pilgrims and Mayflower crew.

Once complete, the avatars were tested in non-textured versions of the virtual Mayflower, to ensure that the correct scaling factors had been adopted.
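
A scaling check of this kind can be reduced to a small calculation. The sketch below is illustrative only: the function names, the 170-unit mesh height and the 1.70 m target are hypothetical values, not figures from the project.

```python
import math


def uniform_scale_factor(mesh_height_units, target_height_m):
    """Return the uniform scale that maps a mesh's authored height to metres."""
    if mesh_height_units <= 0:
        raise ValueError("mesh height must be positive")
    return target_height_m / mesh_height_units


def is_correctly_scaled(scaled_height_m, target_height_m, rel_tol=0.02):
    """Check that an imported avatar is within a relative tolerance of its target height."""
    return math.isclose(scaled_height_m, target_height_m, rel_tol=rel_tol)


# Hypothetical example: a mesh authored 170 units tall (centimetres)
# that should stand 1.70 m tall in the virtual ship.
factor = uniform_scale_factor(170.0, 1.70)
scaled_height = 170.0 * factor
```

Testing against non-textured geometry, as described above, keeps such checks quick because only the proportions of avatar and ship need to be compared.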